Elon Musk’s DOGE has introduced some novel mental states into various parts of the American polity. Among its supporters, one finds both triumphalism and confusion. Among its opponents, one finds outrage, or sadness, or alarm, or all of these things combined, and confusion. There’s confusion on both sides because everyone is trying to figure out whether Donald Trump and Musk are using DOGE to balance the federal budget, or to reduce regulations for businesses, or to increase efficiency in government operations, or to attack Trump’s enemies. In any case, DOGE has been a huge deal. The sudden speed of its unambivalent bloodletting is unlike anything anyone’s ever seen before. It must be the most audacious and consequential governmental undertaking in American history that no one knows the point of.

The idea that it’s meant to cut into the budget deficit sits uneasily with the fact that personnel spending accounts for a very small portion of the federal budget, and that Trump and the Republican Congress are readying a tax cut that would add almost five trillion dollars to the deficit over the next nine years. That it’s an attack on Trump’s enemies doesn’t fit with its speedy assault on the National Park Service and the National Weather Service. Trump has never beefed with park rangers or meteorologists, as far as I know, nor they with him. If it’s going after the entrenchment or stale expertise of ageing bureaucrats, why is it erasing whole classes of new government employees?

Early on, when some suspicious NGO contracts attached to the US Agency for International Development came to light, I experienced a passing moment of both orientation and reassurance. So this was what they were doing, severing the money-link between the US Treasury and the network of extremist NGOs that have deformed policymaking in both the federal bureaucracy and the Democratic Party. This was a DOGE action that made sense. But then, as DOGE went about firing park rangers and weather researchers, I joined a lot of other people in being disoriented again.

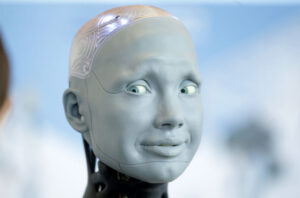

Then, recently, I heard a different description of the real vision guiding DOGE that is alarming in its own way but also reassuring in that it kind of matches up with the otherwise confusing moves we’re seeing. This description comes via the New York Times’s Ezra Klein, who asked “a lot of people involved in the Trump administration” what DOGE is all about. “I’ve been surprised how many [of those] people understand… what Trump and Musk and DOGE are doing… as related to AI,” Klein related in a recent podcast. “What they basically say is: The federal government is too cumbersome to take advantage of AI as a technology [and] needs to be stripped down and rebuilt to take advantage of AI… [T]he dismantling of the government allows for a creative destruction that paves the way for the government to better use AI.” This AI connection becomes both weirder and more plausible when people — among them the excellent Nicholas Carr, one of the most humane and prescient writers on digital technology — also suggest that DOGE is hoovering up government data to feed into an AI that Musk is building. (The Trump administration denies this, for what that’s worth.)

It’s fair to assume that Alex Karp, the billionaire co-author of the new book The Technological Republic, views DOGE in these AI terms, and affirms its mission. Karp is a co-founder and current CEO of Palantir Technologies, which earns much of its revenue from selling software to the Pentagon, and in early March, his book debuted at Number One on the New York Times bestseller list. With Musk waving a chainsaw in the White House, and this book forcing the other bestsellers to submit to its superior will, we seem to be in a spooky, futuristic moment where the masters of Silicon Valley are escaping their market sectors to rule all the other domains as well.

Karp is impressively educated, with a Bachelor’s degree from Stanford and a PhD from Goethe University in Frankfurt, Germany — the PhD in a smart-sounding discipline called “Neoclassical Social Theory”. (For what it’s worth, “Neoclassical Social Theory” seems to be a synonym for “old-fashioned sociology”.) Since Karp has those fancy degrees in those fancy disciplines, and since his book had been described as a successor to Allan Bloom’s The Closing of the American Mind, I was eager to give it a read, excited that another deeply erudite and fairly well-written book of ideas had somehow made it to the top of the bestseller lists. Alas, The Technological Republic is dully written, and its erudition suggests Wikipedia research rather than doctoral degrees in neoclassical things. That is, Karp’s chapters typically grow from The Most Famous Study, and his research consistently yields The Most Obvious Reference. You get the Milgram Experiment and Dunbar’s Number. If you turn a page and glimpse the name “Isaiah Berlin”, you know you’ll soon be reading of foxes and hedgehogs. If you catch “William F. Buckley” out of the corner of your eye, you can confidently guess that a reference to the Boston phonebook is just up ahead. Alan Greenspan? Place a giddy bet on “irrational exuberance”.

Somehow, the blandness and familiarity of the book’s contents only reinforced my sense that it emanates from some unbounded power that rules us all. How else to explain its immediate conquest of the bestseller lists? Given what I know about Karp’s education and professional success, my assumption was that he must be smarter and have better taste than this book indicates. I began to suspect that The Technological Republic is mediocre on purpose. But why? To what purpose? One might chalk up its dullness and shallowness to simple laziness, but, if a lazy effort’s all you had the time and energy for, why make it at all? It’s not like the earnings from a book are going to matter to a billionaire. You might rather guess that he wrote this book because he had something big to get off his chest. But if so, you’d expect a real effort, something that made him look like a serious and distinctive thinker rather than the median TED-talker, assuming he can tell the difference. And my various theories about him force me to assume he can tell the difference.

So next I thought that maybe it’s an example of “esoteric writing”, that hidden within its lines of humdrum text is a secret subtext of lesser banality, decodable only by select readers. Then — in an eerie coincidence — not long after I indulged this paranoid thought, I reached the passage where Karp discusses Leo Strauss, the German-Jewish philosopher who made esoteric writing and reading a preoccupation of several generations of Straussian academics. This coincidence pointed me to a couple of others. Allan Bloom was Leo Strauss’s most famous student, and the person who compared Alex Karp to Allan Bloom was George Will, the conservative columnist for the Washington Post, whose lawyer son started working for Karp’s company a few months before Karp’s book came out. Is it too cynical or paranoid to imagine a powerful tech company hiring a young lawyer to get his famous columnist father to write an op-ed about the company’s CEO’s upcoming book? It would have been for me, before Musk came along to (reportedly) data-mine the US government as he shrinks it for better digestion by his AI, and before a Palantir executive placed an unserious book atop the New York Times bestseller list. In other words, recent developments have made my usual lens of polite scepticism feel like a weak heuristic.

Another thing about the book that left me somewhere between slightly suspicious and outright paranoid was its vagueness about both its real subject and the real object of its critique. Read as a whole, with special attention to its preface, its second chapter, and the final sentence of the final paragraph of the final chapter, the book seems to be about AI, and, specifically, how important it is for America to master AI for its geopolitical competition with China. But beyond those early parts and that final paragraph, there are almost no references to AI. There’s no description of what AI-based competition with China would look like, or why it would be terrible for the US to lose or just fall behind in that competition, or whether the scarier AI scenarios are or are not well-grounded.

Karp’s chapter on AI does take up one common worry about it, but only to dismiss it in terms so blithe they left me (again) a little suspicious about his seriousness. “[H]ow,” he asks, “will humanity react when the… quintessentially human domains of art, humor, and literature come under assault?” As a writer, I view this prospect with a certain gloom, I think understandably. But Karp says we should drop our vain worries and anticipate the coming artistic and literary superiority of AI as a time of “collaboration between two species of intelligence”. Besides, he says, having AI overtake us in these creative domains “may even relieve us of the need to define our worth and sense of self in this world solely through production and output”.

This is what I’m talking about! I simply can’t believe he believes that we artists and writers might reasonably view our obsolescence at the metaphorical (so far) hands of AI as some sort of liberation from the grim imperatives of “production” and “output”. I think he’s just saying that. Why? I don’t think he’s being dishonest, exactly. I think he just wants to regulate consideration of the topic, to contain the unruly and depressing philosophical discussions that haunt AI.

Karp signals this same desire, to keep everyone from getting hung up on the deeper questions about AI, in his treatment of the people best known for asking the deeper questions about AI. After his breezy dismissal of artists’ and writers’ concerns about their obsolescence, he devotes barely two pages to the arguments of AI “doomers” such as Eliezer Yudkowsky, and then, having conceded their existence, he ignores their more serious points. Instead, he executes a sort of bait-and-switch, tweaking the AI “alignment” crowd for their wokeness, their silly “policing [of] the wording and tone that chatbots use”, while ignoring their darker, more defining predictions about malevolent AI taking over the world and killing everyone. (Apocalyptic worries about AI, he assures us at another point, have repeatedly been shown to be “premature”.)

A closely related bait-and-switch operates throughout the book. We’re given a history of the tech business that moves from the early, patriotic collaboration of engineers and generals that helped defeat the Nazis and the Japanese to the hippyish decadence and woke self-righteousness of later engineers, which culminates in a 2019 rebellion by Microsoft workers after their company took a contract to work with the US Army (and Microsoft knuckled under). This is fair enough. That precious moralism is weak and vain. But the silly, decadent, antipatriotic posturing that he returns to throughout the book is a strawman. The more serious objection to Karp’s programme of arming the Pentagon with AI doesn’t come from Leftist distaste for patriotism or woke worries about insensitive language in chatbots. It comes from people who think weaponising AI is really dangerous.

He doesn’t give these worries any real airtime, but you know what his response to them would be if he did, since it’s the response to his strawman version of them: China, the spectre of China striding ahead in an AI arms race. Now, the spectre of China does concentrate the mind — China invading Taiwan and hoarding the chips that drive AI, a China-AI hegemon running a protection racket across the whole Pacific, if not the whole world. But if the alternative to this scenario is a decades-long arms race of autonomous non-human agents of increasing capacities whose future inclinations we can neither predict nor, perhaps, control, then we at least need to sit down and talk about which of these options is less bad. You expect a book that is (tacitly) about why the American AI business needs to align itself with the Pentagon — by the CEO of a tech company that has happily aligned itself with the Pentagon — to take up this discussion. Its absence in such a book, the sidelining and strawmanning of the most prominent figures trying to push this discussion, is (again) suspicious. It makes you think Karp’s actual project is not to use rational argument to rebut his readers’ readiest fears but instead to employ a sort of rhetorical mood-management on them, calming those fears through cagey messaging.

Then again, maybe the lesson in all this is that argument or rational persuasion or democratic deliberation doesn’t matter anyway, anymore. Maybe what Karp is saying in his cagey way is that, with the irresistible forward momentum of the technology and the inescapable arms race in which it figures so centrally, we no longer have a choice in the matter. We’re going where it’s taking us, however we quibble about existential risk and assorted other existential bummers. We might as well be the ones who reach that unspeakable future first. And so perhaps the subtextual point of Karp’s book is to make us feel a little less bad about these inevitabilities.

That interpretation makes sense, given other things in this book. Much of The Technological Republic is devoted to showing that humans and their institutions are no longer suited for life at the highest levels of competition — in business or geopolitics. It is a sort of anthropology that portrays people as inherently conformist, dogmatic, and status-minded, which generally makes the organisations they inhabit hopelessly slow and glitchy, governed more by petty social imperatives than by their actual purposes and needs. People protect their turf instead of inviting creative collaboration. They waste valuable hours staging useless meetings to preen in front of their underlings. They stay safely within their narrow job descriptions when there’s needful work to be done just outside of them.

As counterexamples to these tendencies, and as hints at his broader view of the future of business and government, Karp offers two social formations that do things better. One is companies that are still led by their founders. A founder-leader, embodying the original mission of the business with his physical presence and personal influence, keeps employees concentrated on this mission in a way that disciplines their innate tendencies toward dogmatism, conformism, and status-mongering.

The other counterexample is swarms of bees, which are amazing to the Silicon Valley CEO because of how innovative and pragmatic they are. Individual worker bees are consumed with the hive-mission of finding food and shelter but untethered to predefined roles and undistracted by concerns about what other bees think of them. Bees don’t do hierarchy or conformity or turf-protection. They don’t get stuck on old ideas. They eagerly seek out new possibilities. They give suggestions fearlessly, based on what they’ve found out there on the edges of the swarm, and they take suggestions without jealousy or insecurity, judging them on the simple standard of what works.

What bees do, and what people do in the most purpose-driven businesses and organisations, is identify “voids” of knowledge and function and fill those voids if they can. Were The Technological Republic merely a book about bees and businesses, it would be pretty interesting — in the conventional terms of what it comes out and says. But it’s not just that. It’s a book about the technological republic, or about making the republic technological, or about watching the republic become technological on its own. And this stuff about swarms and voids is where it seems to link up with moves being made within our actual republic, not in what it comes out and says but — to the reader made a little paranoid by its glaring omissions — in where it seems to point.

Musk’s young minions in DOGE are creating plenty of voids, but you can’t really expect the people still left in the government to fill those voids. They’re people. It’s the government. Government workers are unlikely to respond to the mass disappearance of their colleagues with the desperate adaptation one might expect in a private business. It’s easier to imagine that the work those ex-colleagues once did will simply go undone. It’s not like there’s an inspiring founder in every government office who will keep the fire of purpose burning in the stunned bureaucrats who survived the great culling. From what I’ve heard from insiders, a lot of the effort of these survivors is now devoted to communicating without email, for fear that they’ll inadvertently type a word from DOGE’s long list of anathemas and end up chainsawed from their jobs.

But you know what will soon be really good at filling voids, and indeed in swarmlike, hivelike fashion? AI. AI agents are terribly pragmatic even now, fluid and tireless in their search for solutions to the problems we present them, and they’re totally unworried about what other AIs think of them, and, no doubt, they’ll soon be presenting problems to themselves, identifying voids and taking the initiative to fill them. When Karp went on record as a fan of Musk’s actions to “disrupt” the government, his happy mood seemed a product of those bee and swarm and void scenarios, the idea that they were coming to life with such convenient timeliness, as if he’d scripted it all in advance.

It was an earnings call. He was speaking to investors. “We love disruption”, he said, “and whatever is good for America will be good for Americans and very good for Palantir.” I think he’s probably right about the Palantir part, and I hope he’s right about the America part. But there’s some non-zero chance that the real agents of this great disruption, which Karp is trying to hasten for the sake of his business, will end up being a species of intelligence that doesn’t care what either of us thinks, or what either of us hopes.

Disclaimer

Some of the posts we share are controversial, and we do not necessarily agree with them in their entirety. Sometimes we agree with the content or part of it but do not agree with the narration or language. Nevertheless, we share them because we find them interesting, valuable and/or informative, or because we strongly believe in freedom of speech, a free press and journalism. We strongly encourage you to take a critical approach to all the content and to do your own research and analysis to form your own opinion.

We would be glad to have your feedback.

Source: UnHerd. Read the original article here: https://unherd.com/