I have always written for a living. But most of that wasn’t the fun kind. Before my first essay for UnHerd was published, just over three years ago, I mostly did the other kind of writing: the anonymous stuff that makes up the vast bulk of activities under the broad heading “professional writer”. I’ve written corporate blogs and tweets, bashed out press releases, white papers and website copy, and countless other subtypes of writing for which the commercial world will pay.

It’s dull, and not even very lucrative. Now, to make matters worse, it appears that the robots may be coming for even its meagre wages. ChatGPT, a “large language model” optimised for conversation, launched at the end of November, and within a week garnered over a million users. It represents a step-change in AI writing, synthesising immense bodies of information and responding to chat prompts with uncannily clear, coherent and usually fairly accurate paragraphs of reasonably well-written text.

It appears, in other words, that robot “writers” are now so advanced they can match or exceed an averagely competent human writer across a vast range of topics. What, then, does this mean for human toilers in the textual saltmines? There have been breathless reports on how robot writing has the potential to disrupt all manner of writing-related fields, from online search through the kind of “content marketing” I used to do for a living, to academic writing, cheating at schoolwork and news reporting.

Are writers destined to go the way of the artisan textile-makers who starved to death after the inception of the mechanical loom? Perhaps. But this is complicated by the fact that it was the industrialisation of writing that created the role of “professional writer” as we know it — along with much else besides. Now, the digital revolution is on its way to destroying that model of authorship — and with it, driving former denizens of the “world of letters” into new and strange cultural roles.

As the writer Adam Garfinkle has argued, the world of print was, to all intents and purposes, the democratic world of liberal norms. In the UK, literature, high finance, and a great many political norms we now take for granted emerged from the same heady atmosphere in the coffee-houses of 18th-century London. It was this explosion of competing voices that eventually distilled into ideas of “high” and “low” literary culture, norms of open but (relatively) civil debate on rational terms, and, as literacy spread, the ideas of rationally-based, objective “common knowledge” and mass culture as such.

Print culture in the 19th century saw an astonishing volume of writing produced and devoured for self-improvement, entertainment and political engagement. Industrial workers self-funded and made use of travelling libraries stuffed with classics in translation, exhibitions and museums were popular, and public lectures could spill out into the street. These conditions also produced the “author”, with a capital A, in the sense that those of us over 40 still retain. This figure has two key characteristics: first, a measure of cultural cachet as a delivery mechanism for common culture, and second some means of capturing value directly from this activity.

But the digital revolution has already all but destroyed the old authorship model, even if a small subset of writers still manages to get very rich. De-materialising print served to democratise “authorship”, publishing, and journalism, but by the same token made it far more difficult to get paid. There are a great many bloggers and “content creators” out there; meanwhile very few authors make a living from writing books, and journalist salaries have been stagnant for years. Growing numbers of would-be writers simply head (as I did) straight to PR and communications where the money is somewhat better.

Now, when even routine PR and communications writing work can increasingly be done by robots, we can expect the terrain to mutate still further. We can expect some of the types of work I spent 15 years doing to become fact-checking work instead: a machine, after all, doesn’t know how to judge if its output makes sense. We can glimpse some of the necessity of this in the fact that Stack Overflow, a knowledge-sharing platform for programmers, has already temporarily banned input from ChatGPT because too many people were posting robot-generated content, and it wasn’t reliable enough.

But even those writers lucky enough to avoid the sense-checking saltmines will struggle to make a living unless they’re already famous. In this context, expect to see patronage making a comeback: a phenomenon that is, in fact, a reversion to the historic norm for creators. The appearance of “slam poet” Amanda Gorman at the inauguration of US President Joe Biden is only one prominent instance of the trend; I can think of a great many interesting and popular new publications, with clear cultural value, which are funded wholly or in part by wealthy philanthropists.

But this won’t be the end of ways that a mechanised acceleration in the volume of content will change the field of letters. For if the print era cultivated habits of long-form reading and thinking that profoundly structured our pre-digital culture and politics, the digital revolution has already taken us from scarcity to excess — and is taking print-era liberal discursive norms with it. In the brave new digital world, “deep literacy” is replaced by an attention economy in which every paragraph has to compete with a trillion others, meaning the incentive is for engagement: thrilling stories, grotesquerie and clickbait.

Think of the change in tone since the New York Times shifted its focus to digital subscriptions, and you’ll see what I mean. Now accelerate that a millionfold with AI-generated text. Anyone clinging to any residual optimism about the norms of objectivity or civil discourse under those conditions hasn’t been paying attention. Nor have those still optimistic about free speech and the marketplace of ideas. For along with an attention economy comes attention politics: the tussle to control what people notice, or who gets a platform, in which some censorship is now inevitable.

We may decry this as “illiberal”, but it’s not wholly unjustified. For while the Twitter Files have revealed just how consequential control of the censorship mechanisms can be, few would deny that “misinformation” is, under digital conditions, genuinely easy to manufacture and spread. Consider, for example, the sophisticated propaganda campaign, powered by bot farms, through which Chinese authorities propagandised Western electorates into demanding their governments introduce China-style Covid lockdowns.

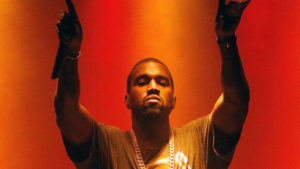

In this context, “speech” itself fades in significance, relative to control over the algorithmic parameters of speech. And the fight is on over who sets the rules governing which opinions the algorithms are allowed to index, transmit, learn from – or suppress. The writer James Poulos calls this “catechising the bots”. The necessity of such catechism was learned the hard way, in 2016, when Microsoft released Tay, a chatbot that learned from user input fed in via Twitter. Within a day, mischievous contributors had “taught” Tay to say Nazi things of the kind that recently got even Kanye West cancelled.

In this sense, AI design is rapidly taking on an explicitly moral dimension, as a kind of post-human curator of the “common culture”. One excited tech CEO recently celebrated this as a positive change, saying of ChatGPT that “It’s just like a human being except it has all the world’s knowledge.”

In the 18th and 19th centuries, then, the Author with a capital A enjoyed status as guardian of and contributor to the common culture. Today, though, we’ve long since ceded that terrain to robots, and to the cyborg theologians who catechise them. No matter how fast you read, there’s no competing with a machine that can instantaneously index and synthesise a century’s books; an entire generation has now grown up with Google. ChatGPT just formalises robots as curators of the consensus view. What’s left, then, for human authors to contribute?

To state the obvious, there are still plenty of things those robots can’t do besides fact-checking, not least because of their catechism. If you want the bland, normative, politically unimpeachable top-line consensus on any given topic, ask ChatGPT about it and you’ll get three paragraphs of serviceable prose. That’s all well and good for cheating in a school essay, but bland, faceless, normative views aren’t the only kinds of writing for which there’s an appetite.

In the AI age, expect human writers to specialise in the esoteric, tacit, humorous or outright forbidden speech that robots either can’t capture (ChatGPT is reportedly rubbish at jokes), or that “AI bias” teams work hard to scrub from the machine. As one Right-wing anon put it (appropriately colourfully) recently, one way to prove you’re human as a writer in the ChatGPT age will be to say incredibly racist things.

If I’m right, we can kiss goodbye to what’s left of the print-era connection between “author” and “authority” in the sense of generally-accepted objectivity. But I expect many creators to thrive anyway. It’s just that instead of trying to beat the machine at synthesising the consensus, humans will be prized for their idiosyncratic curation of implicit, emotive, or outright forbidden meanings, amid the robot-generated wasteland of recycled platitudes.

Successful creators in this field may not even be writers in the old sense; at the baroque end of this emerging field, we might place Infowars host Alex Jones, while at the more mainstream-looking end, Elon Musk’s chosen Twitter Files mouthpiece, Bari Weiss. Whatever you think of the narratives either produces, they are less “authors” in the old sense than sensemakers amid the digital noise.

All of this will feed into the political cleavage I described last week: the emerging disagreement over whether it’s wiser to trust the judgement of human individuals, or the inhuman (and catechised) synthetic consensus of the machine. This divide, which I characterised as “Caesarism” vs “Swarmism”, is, in practice, not really about despotic governance as such. (The Covid era was, after all, a sharp object lesson in how tyrannical swarmism can be.) Rather, it’s about whether we think there’s anything of value in individual human judgement, despite (or perhaps because of) its mess and bias and partiality.

So the world of post-liberal letters will displace the human element from consensus “common culture”. Instead, some humans will be employed sanity-checking the machine consensus, others will tussle over the right to catechise the bots, and others again will forge high-profile careers curating new kinds of sensemaking. Meanwhile, we’ll see the moral status of human sense-making grow ever more politicised, as elites fight over whether or not all of culture can (or should) simply be automated — however nightmarish the result so far whenever this has been tried.

If something remains distinctively the domain of human creators in this context, it’s not curating common knowledge but the hidden kind: the esoteric, the taboo, the implicit and the mischievous. The machine still can’t meme. May it never learn.

Source: UnHerd. Read the original article here: https://unherd.com/