A casual consumer of scientific journalism could be forgiven for thinking that we are living in a golden age of research. Systematic evidence, however, suggests otherwise. Breakthroughs comparable to the discovery of the structure of DNA, only 70 years ago, have been all too rare in recent decades, despite massive increases in investment. Scientific work is now less likely to go in new directions, and funding agencies are less likely to bankroll more exploratory projects. Even in areas where scientific progress is still robust, making discoveries takes a lot more effort than it did in the past. The cost of developing new drugs, for example, now doubles roughly every nine years.

Experts disagree on what has been holding science back. A common explanation is that potential discoveries are fewer and harder to find, which absolves scientists and institutions of responsibility. Yet similar complaints have been made in nearly every era, for example by late 19th-century physicists on the brink of discovering relativity. And such explanations can be self-fulfilling: it’s harder to get funding for ambitious exploratory work deemed infeasible by your peers.

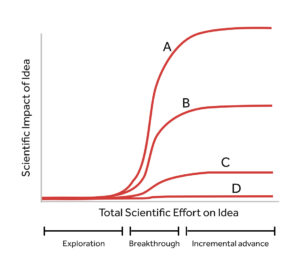

To understand the slower pace of discovery, it is crucial to understand the process by which scientific breakthroughs happen. It can be illustrated by a surprisingly simple three-phase model. First, in the exploration phase, if a new scientific idea attracts the attention of enough scientists, they learn some of its key properties. Second, in the breakthrough phase, scientists learn how to utilise those key properties fruitfully in their work. Third, in the final phase, as the idea matures, advances are incremental. It still generates useful insights, but the most important ones have been exhausted; much of the work in this phase focuses on the idea’s practical applications.

Scientists are quite willing to work on ideas during the breakthrough phase; after all, everyone wants in on a project with good prospects. They are also willing to work on mature ideas, to reap the rewards of ideas that have already proved successful. But working on novel ideas exposes a scientist’s career to considerable risk, because most such ideas fail. This reluctance is costly, because the greatest risks often come with the greatest rewards. For example, in 2011, researchers racing to be the first to edit genes in mammalian cells considered CRISPR a risky choice of technique, because it was still in many ways undeveloped. Today, by contrast, it is one of the most celebrated advances in biomedicine.

The first graph shows the development of four hypothetical ideas, A, B, C and D, through the three phases of this model. Given sustained scientific effort in the exploration phase, ideas A and B will develop into important advances; idea A’s S-curve is steeper in the breakthrough phase, meaning it is the more significant of the two for the broader scientific community. By contrast, ideas C and D will never amount to much, no matter how much effort is expended on them. The problem for scientists is that, in the exploration phase, the potential impact of all four ideas may appear nearly identical.

This bias against exploratory science points to a critical driver of scientific stagnation: scientists are frequently reluctant to spend their time exploring new ideas and have increasingly turned their attention to incremental science. This is backed up by quantitative evidence. University of Chicago biologist Andrey Rzhetsky and his colleagues found: “The typical research strategy used to explore chemical relationships in biomedicine… generates conservative research choices focused on building up knowledge around important molecules. These choices [have] become more conservative over time.” Another paper by the same team (led this time by UCLA sociologist Jacob Foster) also reports: “High-risk innovation strategies are rare and reflect a growing focus on established knowledge.” Meanwhile, a recent analysis by the sociologist Russell Funk and his colleagues tracks a “marked decline in disruptive science and technology over time”, and attributes this trend to scientists relying on a narrowing set of existing knowledge.

And yet, the underlying risk of failure in exploratory research has always been a feature of scientific investigation. So why is it dictating scientists’ behaviour more than ever? In short, because of the change in the way their success is measured. The rule for research scientists used to be “publish or perish”. First articulated in the Forties, this notion designates a scientist who publishes many papers as “productive”. But in recent decades, the importance of this metric has faded. Now, the popularity of a given article trumps all, and popularity is measured by the number of times other scientific papers cite it. Like the sports statistics sites that report the batting averages of professional baseball hitters, services such as Google Scholar and Web of Science report the extent of a scientist’s influence, valuing their work based on the number of citations it has garnered. The new mantra is: be influential or be sidelined.

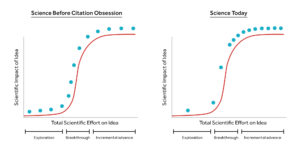

This fixation has decreased the incentive to engage in exploration. When a scientist ventures into an emerging area of investigation, the work is unlikely to garner many citations, because few other scientists will be working on related topics. By contrast, as a successful idea matures, the relative certainty of making discoveries, however incremental, attracts more scientists to the area, and with many people working in the field, each paper in it tends to receive more citations.

The second figure illustrates the shift in research priorities that followed this change in scientists’ incentives. Before the citation obsession (left panel), a healthy proportion of scientists were willing to engage in exploration. Today (right panel), scientists are less willing to play with new ideas and instead pursue incremental advances. This swing in scientific effort has been costly, as fewer ideas have developed into breakthroughs.

Noubar Afeyan, a co-founder of Moderna, is one of many scientists to note that incremental advances are the norm in academia today. He urges reforms to foster a culture in which scientific leaps receive more encouragement — and are rewarded to some extent even when they fail.

One way to achieve this involves indexing the text of each research publication by the words and word sequences that appear in it, a process that reveals a list of the ideas on which an article builds. A paper that relies on more recent ideas is more likely to reflect exploratory science, so services such as Google Scholar should report novelty measures alongside citation metrics. Novelty measures are not perfect, but they are no more flawed than citation metrics are as a measure of scientific influence, and they offer one more way to evaluate work. A sports page that reports only batting average would give a very incomplete picture of the value of a home-run-hitting slugger who strikes out frequently. Scientific evaluation services should likewise avoid presenting an incomplete picture.
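The article does not say exactly how such a novelty measure would be computed. Purely as an illustration, here is a minimal Python sketch built on assumptions of our own: an “idea” is any word or two-word sequence in a paper’s text; an idea’s vintage is the earliest year it appears in a hypothetical corpus of (year, text) pairs; and a paper’s novelty is summarised by the age of the youngest idea it uses, so a smaller number suggests more exploratory work. The function names `ideas_in`, `first_appearance` and `youngest_idea_age` are hypothetical.

```python
import re

# Hypothetical sketch: every word and two-word sequence is treated as an "idea".
# A practical measure would likely need curated concept lists and stop-word filtering.


def ideas_in(text, max_n=2):
    """Return the set of words and word sequences (up to max_n words) in text."""
    words = re.findall(r"[a-z0-9]+", text.lower())
    ideas = set()
    for n in range(1, max_n + 1):
        for i in range(len(words) - n + 1):
            ideas.add(" ".join(words[i:i + n]))
    return ideas


def first_appearance(corpus, max_n=2):
    """Map each idea to the earliest year it appears in a corpus of (year, text) pairs."""
    vintage = {}
    for year, text in corpus:
        for idea in ideas_in(text, max_n):
            if idea not in vintage or year < vintage[idea]:
                vintage[idea] = year
    return vintage


def youngest_idea_age(year, text, vintage, max_n=2):
    """Age in years of the newest known idea a paper uses (None if no idea is known).

    A smaller value means the paper builds on more recent ideas.
    """
    ages = [year - vintage[idea] for idea in ideas_in(text, max_n) if idea in vintage]
    return min(ages) if ages else None


if __name__ == "__main__":
    # Toy corpus, invented purely for illustration.
    corpus = [
        (1990, "gene therapy vectors"),
        (2005, "RNA interference screens"),
        (2012, "CRISPR gene editing"),
    ]
    vintage = first_appearance(corpus)
    print(youngest_idea_age(2013, "CRISPR gene editing in mice", vintage))  # 1
    print(youngest_idea_age(2013, "improved gene therapy vectors", vintage))  # 23
```

Taking the youngest idea rather than an average keeps very common, long-established words from swamping the score: a paper registers as novel if it draws on even one recent idea. That is a design choice for this sketch, not necessarily how any existing service computes novelty.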

An alternative, and idealistic, response would be to stop measuring scientific impact at all — but in reality, this is infeasible. Scientists, like other high-status professionals, cannot escape today’s relentless performance quantification.

Moreover, when used correctly, metrics can serve a useful purpose in allocating limited research dollars. Here, too, a shift is needed. University administrators should start using novelty metrics when making hiring, tenure and promotion decisions, and funding agencies should use them when deciding which research to support. Doing so would greatly increase the incentive for scientists to pursue exploratory work. Science as a discipline would become more hospitable to people who may be staying out of academia for fear that exploration is punished rather than rewarded.

Fortunately, at least in the United States, this goal enjoys bipartisan support. The Biden administration’s first budget request, for instance, included $6.5 billion to found ARPA-H, the Advanced Research Projects Agency for Health, which seeks to support “high-risk exploration that could establish entirely new paradigms” by taking funding decisions away from influence-obsessed peer reviewers. Still, this is merely an incremental step in the right direction: it directly affects the incentives of only the small subset of biomedical researchers involved with ARPA-H. To truly reignite biomedical science, the career incentives facing a much larger share of the research workforce must change fundamentally.

The development of the mRNA Covid vaccines is an excellent example of what scientists can do when given the incentives to pursue novel work. It also shows that important discoveries are often the result of sustained effort by a large community. Katalin Karikó, perhaps the most celebrated of the scientists who worked on the mRNA vaccines, credits hundreds of others for contributing to the effort. Ideas like these develop from infancy to breakthrough only if many researchers are willing to try them out, develop them and debate their merits. And when many others are willing to take the risk of trying out new ideas, individual scientists are more likely to get involved.

The history of mRNA vaccine technology also shows how hostile science can be to novel ideas. Key papers on its early development were rejected time and again by the leading scientific journals, which fear losing status if they publish too much exploratory science, because such work is less likely to go anywhere. Yet, as we have seen, it is nearly impossible to judge the feasibility and significance of new ideas in their infancy, when they are still raw and poorly understood. And the rejection of innovative work by top journals makes negative judgments self-fulfilling: without the attention of other scientists, many ideas die in obscurity regardless of their potential.

It is time, then, to put science back on the path envisioned by the engineer Vannevar Bush in his 1945 report, commissioned by President Roosevelt. Motivated by the loss of academic freedom during the Second World War, the report emphasised the importance of open-ended exploration for scientific progress. Its title, “Science, the Endless Frontier”, reflected Bush’s view that many valuable discoveries remained to be found. In the decades since, a monomaniacal focus on influence in research evaluation has fettered this exploration. If we valued exploration more highly, our frontiers might start to expand again.

Disclaimer

Some of the posts we share are controversial, and we do not necessarily agree with them in their entirety. Sometimes we agree with the content, or part of it, but not with the narration or language. Nevertheless, we share them because we find them interesting, valuable and/or informative, or because we strongly believe in freedom of speech, a free press and journalism. We strongly encourage you to take a critical approach to all content and to do your own research and analysis to form your own opinion.

We would be glad to have your feedback.

Source: UnHerd. Read the original article here: https://unherd.com/